Готовые презентации на тему:

- Образование

- Искусство и Фото

- Наши презентации

- Авто/мото

- Технологии

- Бизнес и предпринимательство

- Карьера

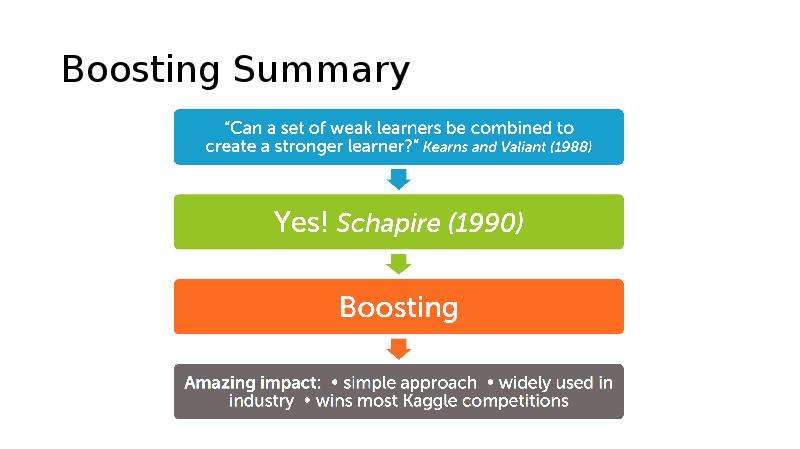

- Данные и аналитика

- Дизайн

- Устройства и комплектующие

- Экономика и Финансы

- Машиностроение

- Развлечения и Юмор

- Путешествия

- Eда

- Политика

- Юриспруденция

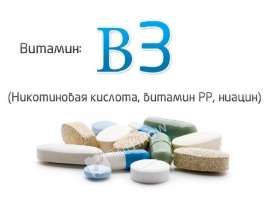

- Здоровье и Медицина

- Интернет

- Инвестиции

- Закон

- Стиль жизни

- Маркетинг

- Мобильные технологии

- Новости

- Недвижимость

- Рекрутинг

- Розничная торговля

- Таможня, ВЭД, Логистика

- Наука

- Услуги

- Программное обеспечение

- Спорт

- Музыка

- Шаблоны презентации

- Детские презентации

- Английский язык

- Астрономия

- Алгебра

- Биология

- География

- Геометрия

- История

- Литература

- Информатика

- Математика

- Обществознание

- Русский язык

- Физика

- Философия

- Химия

- МХК

- ОБЖ

- Окружающий мир

- Педагогика

- Технология

- Начальная школа

- Раскраски для детей

- Товароведение

- Менеджмент

- Страхование